Classification and Regression using the Ensemble of ODT-based Boosting Trees

Source: R/ODBT.R

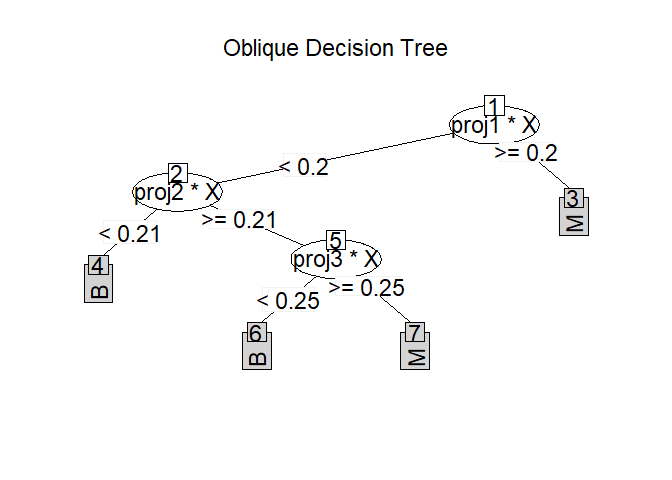

We use ODT as the basic tree model (base learner). To improve the performance of a boosting tree, we apply feature bagging in this process, in the same way as the random forest. Our final estimator, called the ensemble of ODT-based boosting trees and denoted by ODBT, is the average of many boosting trees.
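To make the idea of "feature-bagged boosting, then averaging" concrete, here is a simplified base-R sketch. It is not the ODRF implementation: axis-aligned regression stumps stand in for ODT (no oblique splits), boosting uses squared-error residual fitting, and mtry features are sampled at every split as in a random forest. The helpers fit_stump, boost_one, odbt_sketch and their predict counterparts are hypothetical names, and n_iter = 30 only loosely echoes the max.terms default.

```r
# Simplified sketch of "feature-bagged boosting, then averaging".
# Assumptions: axis-aligned stumps stand in for ODT, squared-error (L2)
# boosting, and mtry features sampled at every split. All helper names
# are hypothetical; this is not the ODRF implementation.

fit_stump <- function(X, r, features) {
  # Fallback when no split helps: predict the mean residual everywhere.
  best <- list(sse = sum((r - mean(r))^2), j = NA, s = NA,
               mL = mean(r), mR = mean(r))
  for (j in features) {
    xs <- sort(unique(X[, j]))
    if (length(xs) < 2) next
    for (s in (xs[-1] + xs[-length(xs)]) / 2) {  # midpoints between values
      left <- X[, j] <= s
      mL <- mean(r[left]); mR <- mean(r[!left])
      sse <- sum((r[left] - mL)^2) + sum((r[!left] - mR)^2)
      if (sse < best$sse) best <- list(sse = sse, j = j, s = s, mL = mL, mR = mR)
    }
  }
  best
}

predict_stump <- function(st, X) {
  if (is.na(st$j)) return(rep(st$mL, nrow(X)))
  ifelse(X[, st$j] <= st$s, st$mL, st$mR)
}

# One boosting model: bootstrap the rows, then repeatedly fit a stump to
# the current residuals using a random subset of mtry features.
boost_one <- function(X, y, n_iter = 30, shrink = 0.1,
                      mtry = max(1, floor(ncol(X) / 3))) {
  idx <- sample(nrow(X), replace = TRUE)
  Xb <- X[idx, , drop = FALSE]; yb <- y[idx]
  f0 <- mean(yb)
  fit <- rep(f0, length(yb))
  stumps <- vector("list", n_iter)
  for (m in seq_len(n_iter)) {
    st <- fit_stump(Xb, yb - fit, sample(ncol(X), mtry))
    fit <- fit + shrink * predict_stump(st, Xb)
    stumps[[m]] <- st
  }
  list(f0 = f0, stumps = stumps, shrink = shrink)
}

predict_boost <- function(b, X) {
  out <- rep(b$f0, nrow(X))
  for (st in b$stumps) out <- out + b$shrink * predict_stump(st, X)
  out
}

# The ensemble is the plain average of independently grown boosting models.
odbt_sketch <- function(X, y, ntrees = 10, ...) {
  lapply(seq_len(ntrees), function(i) boost_one(X, y, ...))
}

predict_odbt_sketch <- function(ens, X) {
  Reduce(`+`, lapply(ens, predict_boost, X = X)) / length(ens)
}

# Usage on simulated regression data
set.seed(1)
X <- matrix(runif(200 * 3), ncol = 3)
y <- sin(2 * pi * X[, 1]) + X[, 2] + rnorm(200, sd = 0.1)
ens <- odbt_sketch(X[1:150, ], y[1:150], ntrees = 10)
pred <- predict_odbt_sketch(ens, X[151:200, ])
mse <- mean((pred - y[151:200])^2)
```

Sampling features per split (rather than once per boosting model) follows the random-forest convention the description refers to; whether ODBT samples per node or per tree is an assumption of this sketch.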

Usage

ODBT(X, ...)

# S3 method for class 'formula'

ODBT(
  formula,
  data = NULL,
  Xnew = NULL,
  type = "auto",
  model = c("ODT", "rpart", "rpart.cpp")[1],
  TreeRotate = TRUE,
  max.terms = 30,
  NodeRotateFun = "RotMatRF",
  FunDir = getwd(),
  paramList = NULL,
  ntrees = 100,
  storeOOB = TRUE,
  replacement = TRUE,
  stratify = TRUE,
  ratOOB = 0.368,
  parallel = TRUE,
  numCores = Inf,
  MaxDepth = Inf,
  numNode = Inf,
  MinLeaf = ceiling(sqrt(ifelse(replacement, 1, 1 - ratOOB) * ifelse(is.null(data),
    length(eval(formula[[2]])), nrow(data)))/3),
  subset = NULL,
  weights = NULL,
  na.action = na.fail,
  catLabel = NULL,
  Xcat = 0,
  Xscale = "No",
  ...
)

# Default S3 method

ODBT(
  X,
  y,
  Xnew = NULL,
  type = "auto",
  model = c("ODT", "rpart", "rpart.cpp")[1],
  TreeRotate = TRUE,
  max.terms = 30,
  NodeRotateFun = "RotMatRF",
  FunDir = getwd(),
  paramList = NULL,
  ntrees = 100,
  storeOOB = TRUE,
  replacement = TRUE,
  stratify = TRUE,
  ratOOB = 0.368,
  parallel = TRUE,
  numCores = Inf,
  MaxDepth = Inf,
  numNode = Inf,
  MinLeaf = ceiling(sqrt(ifelse(replacement, 1, 1 - ratOOB) * length(y))/3),
  subset = NULL,
  weights = NULL,
  na.action = na.fail,
  catLabel = NULL,
  Xcat = 0,
  Xscale = "No",
  ...
)

Arguments

- X

An n by d numeric matrix (preferable) or data frame.

- ...

Optional parameters to be passed to the low level function.

- formula

Object of class formula with a response describing the model to fit. If this is a data frame, it is taken as the model frame (see model.frame).

- data

Training data of class data.frame containing variables named in the formula. If data is missing, it is obtained from the current environment by formula.

- Xnew

An n by d numeric matrix (preferable) or data frame containing predictors for the new data.

- type

Use ODBT for classification ("class") or regression ("reg"). 'auto' (default): if the response in data or y is a factor, "class" is used; otherwise regression is assumed.

- model

The basic tree model for boosting. We offer three options: "ODT" (default), "rpart" and "rpart.cpp" (improved "rpart").

- TreeRotate

Whether to rotate the training data with the rotation matrix estimated by logistic regression before building the tree (default TRUE).

- max.terms

The maximum number of iterations for boosting trees.

- NodeRotateFun

Name of the function (of class character) that implements a linear combination of predictors at the split node. Options include "RotMatPPO": projection pursuit optimization model (PPO), see RotMatPPO (with model = "PPR"); "RotMatRF" (default): a single feature, similar to Random Forest, see RotMatRF; "RotMatRand": random rotation, see RotMatRand; "RotMatMake": a function that users can define, for details see RotMatMake.

- FunDir

The path to the function of the user-defined NodeRotateFun (default current working directory).

- paramList

List of parameters used by the function NodeRotateFun. If left unchanged, default values will be used; for details see defaults.

- ntrees

The number of trees in the forest (default 100).

- storeOOB

If TRUE then the samples omitted during the creation of a tree are stored as part of the tree (default TRUE).

- replacement

If TRUE then n samples are chosen, with replacement, from the training data (default TRUE).

- stratify

If TRUE then class sample proportions are maintained during the random sampling. Ignored if replacement = FALSE (default TRUE).

- ratOOB

Ratio of 'out-of-bag' samples (default 0.368).

- parallel

Whether to use parallel computing (default TRUE).

- numCores

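Number of cores to be used for parallel computing (default Inf).

- MaxDepth

The maximum depth of the tree (default Inf).

- numNode

Number of nodes that can be used by the tree (default Inf).

- MinLeaf

Minimal node size (default ceiling(sqrt(m)/3), where m is the number of training samples n if replacement = TRUE, and (1 - ratOOB) * n otherwise).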

- subset

An index vector indicating which rows should be used. (NOTE: If given, this argument must be named.)

- weights

Vector of non-negative observational weights; fractional weights are allowed (default NULL).

- na.action

A function to specify the action to be taken if NAs are found. (NOTE: If given, this argument must be named.)

- catLabel

Category labels of class list for the predictors (default NULL; for details see Examples).

- Xcat

A vector indicating which predictors are categorical variables. The default Xcat = 0 means that no special treatment is given to categorical variables. When Xcat = NULL, any predictor x that satisfies the condition (length(unique(x)) < 10) & (n > 20) is treated as a categorical variable.

- Xscale

Predictor standardization method: "Min-max", "Quantile" and "No" (default) denote min-max transformation, quantile transformation and no transformation, respectively.

- y

A response vector of length n.
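The defaults for ratOOB and MinLeaf both depend on how the training rows are resampled. As a hedged illustration in base R (default_minleaf is a hypothetical helper, not an exported function), the sketch below checks numerically that the default ratOOB = 0.368 is close to exp(-1), the expected share of rows left out of a bootstrap sample, which is presumably why that value was chosen. It then evaluates the MinLeaf default expression from the Usage section for the training sizes used in the Examples.

```r
# Expected out-of-bag fraction under bootstrap sampling of n rows:
# each row is missed by all n draws with probability (1 - 1/n)^n -> exp(-1).
n <- 1000
analytic <- (1 - 1 / n)^n
set.seed(42)
oob_frac <- replicate(200, {
  idx <- sample(n, replace = TRUE)   # one bootstrap sample of row indices
  mean(!(seq_len(n) %in% idx))       # share of rows never drawn
})
round(c(analytic = analytic, simulated = mean(oob_frac), limit = exp(-1)), 3)

# Default MinLeaf, transcribed from the Usage section:
# ceiling(sqrt(m) / 3), where m = n with replacement, else (1 - ratOOB) * n.
default_minleaf <- function(n, replacement = TRUE, ratOOB = 0.368) {
  ceiling(sqrt(ifelse(replacement, 1, 1 - ratOOB) * n) / 3)
}
default_minleaf(100)                       # seeds example, 100 training rows: 4
default_minleaf(80)                        # body_fat example, 80 training rows: 3
default_minleaf(100, replacement = FALSE)  # m = 63.2 rows: 3
```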

Value

An object of class ODBT containing a list with the following components:

- call: The original call to ODBT.
- terms: An object of class c("terms", "formula") (see terms.object) summarizing the formula. Used by various methods, but typically not of direct relevance to users.
- ppTrees: Each tree used to build the forest, with the following components:
  - oobErr: 'out-of-bag' error for the tree: misclassification rate (MR) for classification or mean square error (MSE) for regression.
  - oobIndex: Which training data are used as 'out-of-bag'.
  - oobPred: Predicted values for the 'out-of-bag' data.
  - other: Other tree-related values (see ODT).
- oobErr: 'out-of-bag' error for the forest: misclassification rate (MR) for classification or mean square error (MSE) for regression.
- oobConfusionMat: 'out-of-bag' confusion matrix for the forest.
- split, Levels and NodeRotateFun: Important parameters for building the tree.
- paramList: Parameters in a named list to be used by NodeRotateFun.
- data: The list of data-related parameters used to build the forest.
- tree: The list of tree-related parameters used to build the tree.
- forest: The list of forest-related parameters used to build the forest.
- results: The prediction results for the new data Xnew using ODBT.
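The out-of-bag errors listed above are the usual misclassification rate and mean squared error, computed on OOB predictions. A minimal base-R sketch with made-up toy vectors (not output from ODBT) shows how these quantities and a confusion matrix can be computed; the row/column orientation used for the package's own oobConfusionMat is an assumption here and may differ.

```r
# Toy out-of-bag predictions (made-up values, for illustration only)
y_class   <- factor(c("a", "a", "b", "b", "c", "c"))
oob_class <- factor(c("a", "b", "b", "b", "c", "a"), levels = levels(y_class))

# Misclassification rate (MR): share of OOB labels that are wrong
mr <- mean(oob_class != y_class)
mr        # 0.3333333 (2 of 6 labels wrong)

# Confusion matrix: rows = observed class, columns = predicted class
conf_mat <- table(observed = y_class, predicted = oob_class)
conf_mat

# Mean squared error (MSE) for regression
y_reg   <- c(1.0, 2.0, 3.0, 4.0)
oob_reg <- c(1.5, 2.0, 2.5, 4.0)
mse <- mean((oob_reg - y_reg)^2)
mse       # 0.125
```

The MR can also be read off the confusion matrix as one minus the share of counts on its diagonal.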

References

Zhan, H., Liu, Y., & Xia, Y. (2024). Consistency of Oblique Decision Tree and its Boosting and Random Forest. arXiv preprint arXiv:2211.12653.

Tomita, T. M., Browne, J., Shen, C., Chung, J., Patsolic, J. L., Falk, B., ... & Vogelstein, J. T. (2020). Sparse projection oblique randomer forests. Journal of Machine Learning Research, 21(104).

Examples

# Classification with ODBT (base learners "rpart" and "rpart.cpp").
data(seeds)
set.seed(221212)
train <- sample(1:209, 100)
train_data <- data.frame(seeds[train, ])
test_data <- data.frame(seeds[-train, ])
# \donttest{
forest <- ODBT(varieties_of_wheat ~ ., train_data, test_data[, -8],
  model = "rpart",
  type = "class", parallel = FALSE, NodeRotateFun = "RotMatRF"
)
pred <- forest$results$prediction
# classification error
(mean(pred != test_data[, 8]))
#> [1] 0.06422018
forest <- ODBT(varieties_of_wheat ~ ., train_data, test_data[, -8],
  model = "rpart.cpp",
  type = "class", parallel = FALSE, NodeRotateFun = "RotMatRF"
)
pred <- forest$results$prediction
# classification error
(mean(pred != test_data[, 8]))
#> [1] 0.06422018
# }

# Regression with ODBT.
data(body_fat)
set.seed(221212)
train <- sample(1:252, 80)
train_data <- data.frame(body_fat[train, ])
test_data <- data.frame(body_fat[-train, ])
# To use ODT as the basic tree model for boosting, you need to set
# the parameters model = "ODT" and NodeRotateFun = "RotMatPPO".
# \donttest{
forest <- ODBT(Density ~ ., train_data, test_data[, -1],
  type = "reg", parallel = FALSE, model = "ODT",
  NodeRotateFun = "RotMatPPO"
)
pred <- forest$results$prediction
# estimation error
mean((pred - test_data[, 1])^2)
#> [1] 1.891391e-05
forest <- ODBT(Density ~ ., train_data, test_data[, -1],
  type = "reg", parallel = FALSE, model = "rpart.cpp",
  NodeRotateFun = "RotMatRF"
)
pred <- forest$results$prediction
# estimation error
mean((pred - test_data[, 1])^2)
#> [1] 3.227552e-05
# }